computer music

I’m contributing to instrument design for Anne Hege’s opera The Furies using ChucK and GameTrak controllers. Check it out at laptopera.org.

I perform as part of the Google Mobile Orchestra (GMOrk). We primarily use tether controllers with Android tablets running MobMuPlat and a custom synth designed by GMOrk's leader, Dan Iglesia. I also performed as a guest artist on Sideband’s Bay Area tour in fall 2018.

Haptic 3D Music

While at Iowa State University, I was a research assistant (RA) and student of Dr. Christopher Hopkins, principal investigator (PI) of Virtual Environment Sound Control (VESC). I contributed to the platform's support for dynamic object manipulation in haptic 3D space, adding the ability to spawn and move objects with a stylus.

A Walk

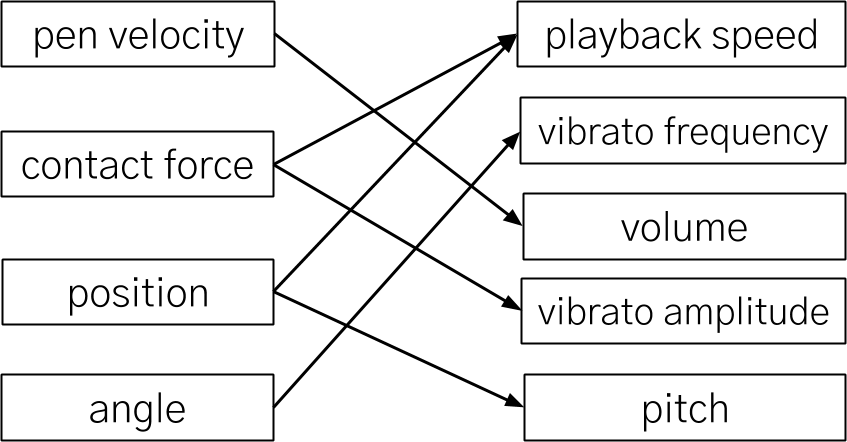

Darren Hushak and I created a piece within VESC, with control in Max/MSP and sound design in Reaktor. The piece is all about textures and mappings. Our objective was to create a virtual musical instrument that maps physical gestures to aurally meaningful and creatively interesting music. In Max/MSP, we combined surface parameters such as friction with stylus interaction parameters such as force, orientation, and velocity, using a variety of mappings to drive sound synthesis.

The set of available inputs:

- position (x,y,z)

- velocity (x,y,z)

- orientation (x,y,z,a)

- force (x,y,z)

- touched (boolean)

- contact point (x,y,z)

- angle from surface (radians)

- and other context-based extensions

Available outputs:

- note on/off

- pitch (playback speed)

- frequency modulation (amplitude and frequency)

- ADSR envelope parameters

- sample volume

Mappings can be convergent (many-to-one), divergent (one-to-many), simple (one-to-one), or multivergent (a combination).
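To make the difference between convergent and divergent mappings concrete, here is a minimal Python sketch. It is not the Max/MSP patch used in the piece: the `StylusFrame` fields, the normalization ranges (`max_force`, `max_speed`), the output names, and the blend weights are all hypothetical choices for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class StylusFrame:
    """One snapshot of haptic input (hypothetical fields modeled on the inputs above)."""
    force: tuple[float, float, float]     # x, y, z components
    velocity: tuple[float, float, float]  # x, y, z components
    friction: float                       # surface friction, assumed normalized to 0..1
    touched: bool


def magnitude(v: tuple[float, float, float]) -> float:
    return math.sqrt(sum(c * c for c in v))


def clamp01(x: float) -> float:
    return max(0.0, min(1.0, x))


def convergent_fm_depth(frame: StylusFrame, max_force: float = 5.0, max_speed: float = 2.0) -> float:
    """Many-to-one: force, speed, and friction converge on a single FM depth in 0..1."""
    if not frame.touched:
        return 0.0
    f = clamp01(magnitude(frame.force) / max_force)
    s = clamp01(magnitude(frame.velocity) / max_speed)
    # Weighted blend; the weights are arbitrary illustrative values.
    return clamp01(0.5 * f + 0.3 * s + 0.2 * frame.friction)


def divergent_from_force(frame: StylusFrame, max_force: float = 5.0) -> dict[str, float]:
    """One-to-many: a single input (force magnitude) drives several outputs."""
    f = clamp01(magnitude(frame.force) / max_force)
    return {
        "sample_volume": f,                    # harder press -> louder
        "attack_ms": 5.0 + 200.0 * (1.0 - f),  # harder press -> shorter attack
        "fm_ratio": 1.0 + 2.0 * f,             # harder press -> higher modulator ratio
    }
```

Here `convergent_fm_depth` needs several inputs to agree before the FM depth reaches its maximum, while `divergent_from_force` lets one gesture reshape volume, envelope, and timbre at once. A multivergent mapping would simply combine both patterns.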

“A Walk” uses complex, primarily convergent mappings. We demonstrated it at the SEAMUS Concert 2013 at McNally Smith College of Music.

Euc-grid

I created a checkerboard-like layout and used my newly written object spawning and movement features to control the x-y input pairs of a Euclidean rhythm generator.
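A Euclidean rhythm spreads k onsets as evenly as possible over n steps. The Python sketch below uses the Bresenham-style formula rather than Bjorklund's algorithm; it yields the same maximally even pattern, possibly rotated. How grid positions fed the generator is not specified above, so `grid_cell_to_rhythm` (column sets the onset count, row sets the cycle length, starting at a hypothetical `min_steps`) is an assumed mapping for illustration only.

```python
def euclidean(pulses: int, steps: int) -> list[int]:
    """Spread `pulses` onsets as evenly as possible over `steps` slots (1 = hit, 0 = rest)."""
    if steps <= 0:
        raise ValueError("steps must be positive")
    pulses = max(0, min(pulses, steps))  # clamp so the pattern is always valid
    return [1 if (i * pulses) % steps < pulses else 0 for i in range(steps)]


def grid_cell_to_rhythm(col: int, row: int, min_steps: int = 4) -> list[int]:
    """Hypothetical mapping from a checkerboard cell to an (onsets, steps) pair."""
    steps = min_steps + row
    return euclidean(col, steps)


if __name__ == "__main__":
    for cell in [(3, 4), (5, 4)]:
        pattern = grid_cell_to_rhythm(*cell)
        print(cell, "".join("x" if hit else "." for hit in pattern))
    # (3, 4) x..x..x.   (the tresillo)
    # (5, 4) x.x.xx.x   (a rotation of the cinquillo)
```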

electroacoustic composition

Two of my pieces: “Etude” and “Chimeric Devotion.”

live coding

I’ve done some live coding in Impromptu and Extempore. Here is a piece I made called “Bight”:

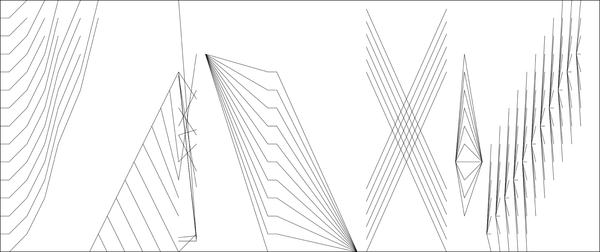

pitch trail composer

I made an application in Max/MSP that lets you draw lines on a canvas and plays them back as glissandi, interpreting the canvas coordinates as time and pitch.
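The application itself was built in Max/MSP; the Python sketch below only illustrates the underlying idea under assumed conventions: x maps linearly to seconds, y maps to MIDI pitch with the top of the canvas highest, and the glide between drawn points is linear in semitones (so it is exponential in Hz). The pitch range `low_midi`..`high_midi` and the `control_rate` are hypothetical.

```python
import bisect


def midi_to_hz(m: float) -> float:
    """12-TET conversion with A4 (MIDI 69) = 440 Hz."""
    return 440.0 * 2.0 ** ((m - 69.0) / 12.0)


def canvas_to_trail(points, width, height, duration_s, low_midi=48.0, high_midi=84.0):
    """Hypothetical scaling: x -> seconds, y -> MIDI pitch (y = 0 is the top, i.e. highest)."""
    trail = []
    for x, y in points:
        t = duration_s * x / width
        m = high_midi - (high_midi - low_midi) * y / height
        trail.append((t, m))
    trail.sort()  # play back in time order regardless of drawing order
    return trail


def pitch_at(trail, t):
    """Linearly interpolate MIDI pitch between the drawn points."""
    times = [p[0] for p in trail]
    if t <= times[0]:
        return trail[0][1]
    if t >= times[-1]:
        return trail[-1][1]
    i = bisect.bisect_right(times, t)
    (t0, m0), (t1, m1) = trail[i - 1], trail[i]
    return m0 + (m1 - m0) * (t - t0) / (t1 - t0)


def render_hz(trail, control_rate=100.0):
    """Sample the glissando as a frequency envelope at `control_rate` values per second."""
    n = int(trail[-1][0] * control_rate) + 1
    return [midi_to_hz(pitch_at(trail, k / control_rate)) for k in range(n)]
```

For example, a single stroke from the bottom-left to the top-right corner of a 400 × 300 canvas, played over two seconds, glides evenly from C3 (MIDI 48) up to C6 (MIDI 84).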

cmix

I translated many of the examples in Heinrich Taube’s “Notes from the Metalevel” from Lisp to PyRTCMix. I also implemented several functions from the Common Music API, most notably nth-order Markov chaining. You can see the code on GitHub.
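As a generic illustration of nth-order Markov chaining (not a port of Common Music's functions, whose interface differs), here is a minimal Python sketch: each context of `order` consecutive items records how often each next item follows it, and generation samples from those weighted counts. The function names `train_markov` and `generate` are hypothetical.

```python
import random
from collections import defaultdict


def train_markov(sequence, order):
    """Count which items follow each length-`order` context in the training sequence."""
    if order < 1:
        raise ValueError("order must be >= 1")
    table = defaultdict(lambda: defaultdict(int))
    for i in range(len(sequence) - order):
        context = tuple(sequence[i:i + order])
        table[context][sequence[i + order]] += 1
    return table


def generate(table, seed_context, length, rng=None):
    """Extend `seed_context` (whose length sets the order) by weighted random choice."""
    rng = rng or random.Random()
    context = tuple(seed_context)
    order = len(context)
    out = list(context)
    while len(out) < length:
        followers = table.get(context)
        if not followers:
            break  # dead end: this context never appeared in the training data
        items = list(followers)
        weights = [followers[k] for k in items]
        out.append(rng.choices(items, weights=weights)[0])
        context = tuple(out[-order:])
    return out


if __name__ == "__main__":
    melody = ["C", "D", "E", "C", "D", "G", "C", "D", "E"]
    table = train_markov(melody, order=2)
    print(generate(table, ["C", "D"], 12, random.Random(1)))
```

In this example the context ("C", "D") is followed by "E" twice and "G" once, so a second-order chain continues it with "E" about two-thirds of the time.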

pops

This was my very first music technology project. Starting from a single 800 ms sample of a “pop” sound, I created a single-timbre sampling instrument reminiscent of a marimba. In Audacity, I cleaned up the sample and pitch-shifted it into a three-octave set of notes. Here is a piece I wrote to showcase it, tracked in Pro Tools.
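Assuming 12-tone equal temperament and resampling-style shifting (where pitch and playback speed change together, as in the “pitch (playback speed)” output listed earlier), a shift of n semitones means playing the sample back at 2^(n/12) times its original rate. The Python sketch below computes those rates and the resulting durations for a hypothetical three-octave range centered on the original pitch, plus a naive linear-interpolation resampler. Audacity's Change Pitch effect, by contrast, preserves duration, so this is not necessarily how the original notes were produced.

```python
def semitone_ratio(semitones: float) -> float:
    """Playback-rate ratio for a shift of `semitones` in 12-tone equal temperament."""
    return 2.0 ** (semitones / 12.0)


def chromatic_set(base_duration_ms: float = 800.0, low: int = -18, high: int = 18):
    """(semitone shift, playback ratio, resulting duration in ms) for each note.

    The default range of -18..+18 semitones (three octaves) is a hypothetical choice.
    """
    notes = []
    for n in range(low, high + 1):
        r = semitone_ratio(n)
        notes.append((n, r, base_duration_ms / r))  # faster playback -> shorter note
    return notes


def resample(samples: list[float], ratio: float) -> list[float]:
    """Naive resampler: ratio > 1 raises pitch and shortens the sound.

    No anti-aliasing filter is applied, so large upward shifts can alias.
    """
    out_len = int(len(samples) / ratio)
    out = []
    for i in range(out_len):
        pos = i * ratio
        j = int(pos)
        frac = pos - j
        a = samples[j]
        b = samples[j + 1] if j + 1 < len(samples) else a  # hold the last sample at the edge
        out.append(a + (b - a) * frac)
    return out
```

With an 800 ms source, the note an octave up lasts 400 ms and the note an octave down lasts 1600 ms, which is the main drawback of building a chromatic instrument purely by changing playback speed.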